What is entity co-occurrence

Entity co-occurrence means that two or more named entities appear together in the same span of text. These entities can be people, places, organizations, or other important terms. If they often appear close to each other, for example in the same sentence or paragraph, that suggests they may be semantically related.

This idea is widely used in natural language processing and text mining. It helps detect patterns, improves search accuracy, and supports knowledge graph creation. For instance, when the terms COVID-19 and vaccine appear together many times in news articles, it points to a likely topical link. However, co-occurrence alone does not establish a true relationship. It is a statistical clue, not a guarantee.

Entity co-occurrence is also useful for relation extraction and for helping search systems work out what a text is about. Tools look for frequent pairings to map how entities relate across topics or documents.

What entity co-occurrence means in text analysis

Entity co-occurrence happens when two or more named entities appear near each other in text more often than chance alone would predict. These entities may include people, places, topics, or other key terms.

This idea comes from corpus linguistics, where experts noticed that some terms naturally occur together. These repeated pairings usually point to a semantic link or a shared meaning. For example, the words heart and cardiology often co-occur in medical writing. That pattern shows they are closely connected in the same topic.

Context and meaning in NLP

In natural language processing, the unit of context matters. When two entities appear in the same sentence, the link is usually stronger than if they are just in the same document. A tighter context gives fewer but clearer co-occurrences. A wider one brings more results, but some may be weak or unrelated.

Entity co-occurrence also relates to the distributional hypothesis, which says that words occurring in similar contexts tend to have similar meanings. So, if two terms show up together again and again, they are likely part of the same idea or field.

Insights from presence or absence

A high frequency of co-occurrence may reveal a hidden relationship. On the other hand, if certain entities never appear together, that gap can also mean something. It could show opposite ideas, or that those entities do not belong in the same topic.

These patterns help in many NLP tasks, such as relation extraction, topic modeling, and building knowledge graphs. By studying how often and where entities appear together, systems can better understand what the text is really about.

How entity co-occurrence is measured and shown in NLP

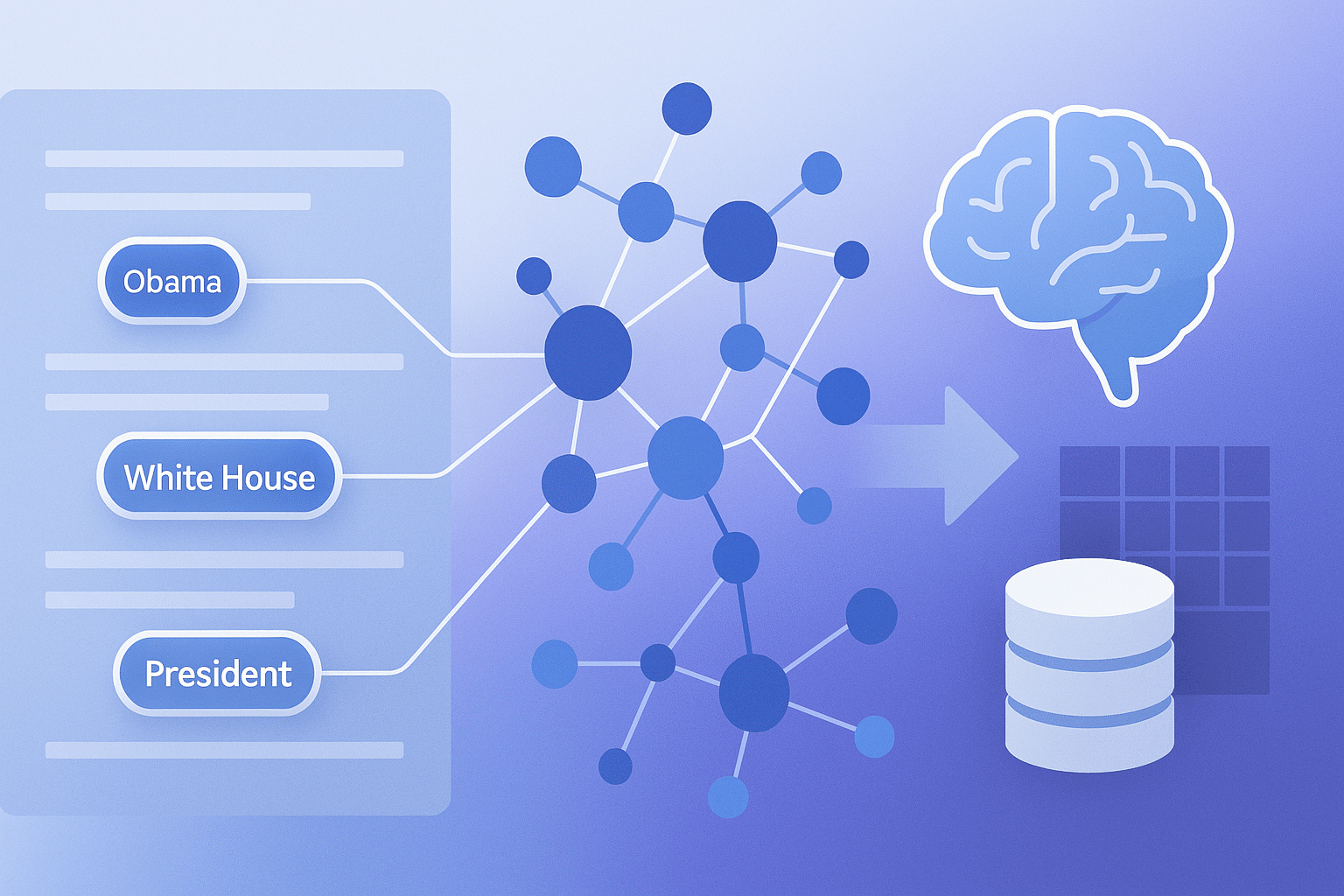

Entity co-occurrence is measured using counts and scores that show how often terms appear together. These values are stored in co-occurrence matrices and adjusted using tools like PMI to highlight meaningful patterns in text.

Raw frequency and co-occurrence matrix

The most basic way to measure entity co-occurrence is by counting how often two entities appear in the same context. These raw frequency counts can be taken at the level of a sentence, paragraph, or document. The data is then arranged into a co-occurrence matrix. This is a table that records how many times each entity pair appears together across a text corpus.

Such matrices form the base of many NLP algorithms. For example, GloVe (Global Vectors for Word Representation) builds a large word–word co-occurrence matrix. It uses this data to generate word embeddings, which help represent semantic similarity between terms.

However, raw counts can be misleading. Very common words or entities may co-occur often simply due to their high frequency, not because of a true link.
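As a minimal sketch of the counting step described above, the following Python snippet tallies how many contexts each entity pair shares. The function name `cooccurrence_counts` and the toy sentences are illustrative assumptions, and entity extraction is assumed to have already happened.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_counts(contexts):
    """Count how many contexts each unordered entity pair shares."""
    pair_counts = Counter()
    for entities in contexts:
        # Deduplicate so a pair counts at most once per context,
        # and sort so each pair always has the same key order.
        unique = sorted(set(entities))
        for a, b in combinations(unique, 2):
            pair_counts[(a, b)] += 1
    return pair_counts

# Hypothetical toy data: each inner list holds the entities found in one sentence.
sentences = [
    ["COVID-19", "vaccine", "WHO"],
    ["COVID-19", "vaccine"],
    ["WHO", "Geneva"],
]

counts = cooccurrence_counts(sentences)
for pair, n in counts.most_common():
    print(pair, n)
```

A sparse mapping from pairs to counts is used here instead of a dense table, since in a real corpus most entity pairs never co-occur.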

Association measures and pointwise mutual information

To solve this, analysts use association measures that adjust for individual frequency. One widely used metric is pointwise mutual information (PMI). PMI compares how often two entities actually co-occur with how often they would co-occur if they appeared independently of each other. A high PMI score means the entities are seen together more than chance would suggest. If two common terms co-occur often but no more than expected, PMI is close to zero, and it becomes negative when they co-occur less than expected.

A positive variant, called PPMI (positive PMI), sets negative scores to zero. It is especially useful in semantic analysis and is widely used for spotting meaningful co-occurrence.
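The PMI and PPMI scores can be sketched in a few lines of Python. This is a rough illustration that estimates probabilities from context counts; the function names and the example counts are assumptions made for the sketch, not values from a real corpus.

```python
import math

def pmi(pair_count, count_a, count_b, total_contexts):
    """Pointwise mutual information in bits, estimated from context counts.

    PMI(a, b) = log2( P(a, b) / (P(a) * P(b)) )
    """
    if pair_count == 0:
        return float("-inf")  # the pair was never observed together
    # Same ratio as in the docstring, rearranged to use the counts directly.
    ratio = (pair_count * total_contexts) / (count_a * count_b)
    return math.log2(ratio)

def ppmi(pair_count, count_a, count_b, total_contexts):
    """Positive PMI: negative scores are clipped to zero."""
    return max(0.0, pmi(pair_count, count_a, count_b, total_contexts))

# Hypothetical counts over 1,000 sentences.
rare_pair = pmi(8, 10, 20, 1000)         # two rare entities, usually seen together
common_pair = pmi(200, 500, 400, 1000)   # two frequent entities, together as often as expected
below_chance = pmi(100, 500, 400, 1000)  # frequent entities, together less than expected

print(rare_pair, common_pair, below_chance)
print(ppmi(100, 500, 400, 1000))
```

The rare pair scores high even though its raw count is small, while the frequent pair with 200 shared contexts scores zero, which is the correction that raw frequency cannot provide.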

Other popular association scores include:

- Log-likelihood ratio (LLR)

- Chi-square test

- Dice coefficient

These methods compare observed co-occurrence with what would be expected statistically, reducing bias from highly frequent terms.
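To make the comparison of observed and expected counts concrete, here is a small sketch of the three scores listed above, computed from a 2x2 contingency table. The function names and the counts are illustrative assumptions, the chi-square version is the plain Pearson statistic without continuity correction, and the log-likelihood ratio is the G-squared form. The sketch also assumes neither entity appears in zero or in all contexts.

```python
import math

def contingency(n_ab, n_a, n_b, n):
    """Observed 2x2 counts: (both, only a, only b, neither)."""
    return n_ab, n_a - n_ab, n_b - n_ab, n - n_a - n_b + n_ab

def dice(n_ab, n_a, n_b):
    """Dice coefficient: shared contexts relative to the two totals."""
    return 2 * n_ab / (n_a + n_b)

def chi_square(n_ab, n_a, n_b, n):
    """Pearson chi-square statistic for a 2x2 table."""
    o11, o12, o21, o22 = contingency(n_ab, n_a, n_b, n)
    numerator = n * (o11 * o22 - o12 * o21) ** 2
    denominator = (o11 + o12) * (o21 + o22) * (o11 + o21) * (o12 + o22)
    return numerator / denominator

def log_likelihood_ratio(n_ab, n_a, n_b, n):
    """G-squared: 2 * sum(observed * ln(observed / expected))."""
    observed = contingency(n_ab, n_a, n_b, n)
    expected = (
        n_a * n_b / n,
        n_a * (n - n_b) / n,
        (n - n_a) * n_b / n,
        (n - n_a) * (n - n_b) / n,
    )
    # Cells with an observed count of zero contribute nothing to the sum.
    return 2 * sum(o * math.log(o / e) for o, e in zip(observed, expected) if o > 0)

# Hypothetical counts over 1,000 contexts.
print(chi_square(200, 500, 400, 1000), log_likelihood_ratio(200, 500, 400, 1000))
print(chi_square(8, 10, 20, 1000), log_likelihood_ratio(8, 10, 20, 1000))
print(dice(8, 10, 20))
```

In the first case the pair co-occurs exactly as often as independence predicts, so both test statistics are zero despite the large raw count.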

Co-occurrence networks and visualization

Once entity pairs are scored, they can be visualized as co-occurrence networks. In these networks:

- Nodes represent entities or terms.

- Edges show co-occurrence, often weighted by PMI, LLR, or frequency.

- Clusters highlight related entities, like terms tied to a disease and its treatments.

Tools like KH Coder or the BlueGraph library help build and study such networks. These tools can show:

- Which entities are most central (have the most links)

- How tightly terms are grouped (clustering coefficient)

- How many steps separate two entities in the network (shortest path length)

Entity co-occurrence networks are now common in text mining and topic analysis, especially where large digital text collections are available.
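The three network measures listed above can be illustrated with a small, dependency-free sketch. In practice a graph library such as NetworkX would normally be used; the helper names, the toy edges, and their weights below are assumptions made for the example.

```python
from collections import deque

def build_graph(weighted_pairs):
    """Turn {(a, b): weight} into an undirected adjacency mapping."""
    graph = {}
    for (a, b), weight in weighted_pairs.items():
        graph.setdefault(a, {})[b] = weight
        graph.setdefault(b, {})[a] = weight
    return graph

def degree(graph, node):
    """Number of distinct entities this node co-occurs with."""
    return len(graph[node])

def clustering_coefficient(graph, node):
    """Share of a node's neighbor pairs that are themselves linked."""
    neighbors = list(graph[node])
    k = len(neighbors)
    if k < 2:
        return 0.0
    links = sum(
        1
        for i in range(k)
        for j in range(i + 1, k)
        if neighbors[j] in graph[neighbors[i]]
    )
    return 2 * links / (k * (k - 1))

def shortest_path_length(graph, source, target):
    """Breadth-first search; returns the hop count, or None if unreachable."""
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None

# Hypothetical co-occurrence edges with raw counts as weights.
edges = {
    ("diabetes", "insulin"): 3,
    ("diabetes", "metformin"): 2,
    ("insulin", "metformin"): 1,
    ("diabetes", "obesity"): 2,
    ("obesity", "exercise"): 1,
}
graph = build_graph(edges)
print(degree(graph, "diabetes"))
print(clustering_coefficient(graph, "diabetes"))
print(shortest_path_length(graph, "insulin", "exercise"))
```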

How entity co-occurrence is used in NLP and text mining

Entity co-occurrence helps NLP systems find related words, build word embeddings, detect topics, and group similar terms. It also supports tasks like query expansion, collocation extraction, and semantic similarity across large text collections.

Finding semantic similarity

In natural language processing, entity co-occurrence helps find hidden relationships between terms. The idea comes from distributional semantics, summed up in J. R. Firth's line: “You shall know a word by the company it keeps.” If two entities appear often with the same co-occurring partners, they are likely related in meaning.

This concept powers word embeddings like GloVe, which uses a co-occurrence matrix to build vector representations of words. Words that share similar co-occurrence profiles end up with similar vectors, making it easier for systems to compare meanings.

Topic modeling and concept grouping

Co-occurrence patterns help group words into topics. Methods like latent semantic analysis and graph-based clustering rely on how often terms appear together. If many words co-occur across documents, they likely belong to the same subject or theme.

This technique also works in multi-word expression detection. For example, phrases like critical mass or salt and pepper can be picked up by checking how often certain word pairs appear next to each other. If the co-occurrence is much higher than chance, the system marks it as a valid collocation.
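A rough sketch of this kind of collocation check for adjacent word pairs is shown below. It scores each bigram with PMI and keeps those above a threshold; the function name, the thresholds, and the toy token list are assumptions chosen for illustration, and a real system would need far more text for the scores to be reliable.

```python
import math
from collections import Counter

def collocations(tokens, min_count=2, min_pmi=1.0):
    """Return adjacent word pairs whose PMI (in bits) is at least min_pmi."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_unigrams = len(tokens)
    n_bigrams = len(tokens) - 1
    results = []
    for (w1, w2), count in bigrams.items():
        if count < min_count:
            continue  # too rare for the score to mean much
        p_pair = count / n_bigrams
        p_w1 = unigrams[w1] / n_unigrams
        p_w2 = unigrams[w2] / n_unigrams
        score = math.log2(p_pair / (p_w1 * p_w2))
        if score >= min_pmi:
            results.append(((w1, w2), score))
    return sorted(results, key=lambda item: -item[1])

# Hypothetical toy text, already lowercased and stripped of punctuation.
tokens = (
    "the team hit critical mass early a critical review said "
    "critical mass came late the mass market followed"
).split()

found = collocations(tokens)
print(found)
```

The minimum count guards against a known weakness of PMI, which tends to overrate pairs seen only once.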

Entity relationships in unstructured text

In unstructured data, named entity co-occurrence can suggest links between people, places, or organizations. For instance, if Company X and Person Y appear together often, it may hint at a work relationship, like CEO or founder. But extra steps are needed to confirm the role.

In biomedical text, frequent co-occurrence between a gene and a disease name may signal a possible research link. This pattern can guide further scientific study.

Second-order similarity and category detection

Some entities may not co-occur directly, but still be connected through shared co-occurrence patterns. This is called second-order similarity. For example, aspirin and ibuprofen may not appear in the same sentence, but both often co-occur with words like fever, pain, and inflammation. That link places them in the same class of analgesic drugs.

These patterns help in query expansion, recommendation systems, and thesaurus building, by pointing out closely related terms.
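Second-order similarity can be sketched by comparing co-occurrence profiles with cosine similarity. In this illustration each entity is represented by the counts of the words it co-occurs with; the profiles and their numbers are made up for the example.

```python
import math

def cosine(profile_a, profile_b):
    """Cosine similarity between two sparse co-occurrence profiles."""
    shared = set(profile_a) & set(profile_b)
    dot = sum(profile_a[k] * profile_b[k] for k in shared)
    norm_a = math.sqrt(sum(v * v for v in profile_a.values()))
    norm_b = math.sqrt(sum(v * v for v in profile_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical counts of context words seen near each entity.
profiles = {
    "aspirin": {"fever": 4, "pain": 6, "inflammation": 3},
    "ibuprofen": {"fever": 3, "pain": 7, "inflammation": 4},
    "Paris": {"France": 9, "Seine": 2},
}

drug_similarity = cosine(profiles["aspirin"], profiles["ibuprofen"])
unrelated_similarity = cosine(profiles["aspirin"], profiles["Paris"])
print(drug_similarity, unrelated_similarity)
```

The two drugs never need to appear in the same sentence: their similarity comes entirely from the context words they share.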

Sentiment patterns and text exploration

Entity co-occurrence is also used in sentiment analysis. If a product name shows up often with words like good, bad, or poor, the system can estimate how people feel about that item.

Finally, co-occurrence helps with text exploration. Analysts can ask simple questions like “Which entities often appear together in this dataset?” The answers often show useful themes or hidden stories inside the text.

How entity co-occurrence helps build knowledge graphs and find relations

Entity co-occurrence is used to estimate whether two entities are connected by a real-world relationship. It helps build knowledge graphs by linking terms that often appear together, and supports relation extraction in large text datasets.

Using co-occurrence as a signal for relation discovery

In information extraction, entity co-occurrence works as an early signal to guess possible relationships. If two entities show up together in text often, it may suggest they are linked in the real world. For example, if Paris and France appear frequently in the same context, a system might suggest a location link. This step is used to generate relation hypotheses, which are later checked with stronger methods.

Early systems used co-occurrence statistics to feed knowledge bases. These systems built an entity–entity graph, where each node was an entity, and edges showed shared context. To avoid false matches from popular terms, edges were often weighted using metrics like pointwise mutual information (PMI) instead of raw frequency.

Weighted graphs and biomedical examples

In large text mining projects, such graphs help identify key associations. For instance, in one system based on COVID-19 literature, entities like genes, diseases, and chemicals were linked if they appeared in the same paper or paragraph. Edges were weighted using both co-occurrence count and PMI, making the graph more useful for biomedical research.

These weighted graphs can be explored with graph algorithms to spot clusters, hubs, or semantic communities. For example, analysts might ask: “Which chemicals co-occur most often with this disease?” The answer can help guide future studies or drug discovery.
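A question like the one above can be answered with a simple lookup over the weighted edges. The sketch below assumes the graph is stored as a mapping from entity pairs to co-occurrence counts and that each entity has a known type; the function name, placeholder entity names, and counts are all hypothetical.

```python
def top_neighbors(edges, entity_types, target, wanted_type, k=3):
    """Rank entities of wanted_type by co-occurrence count with target."""
    scores = {}
    for (a, b), count in edges.items():
        if target not in (a, b):
            continue
        other = b if a == target else a
        if entity_types.get(other) == wanted_type:
            scores[other] = scores.get(other, 0) + count
    # Highest count first; ties are broken alphabetically.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))[:k]

# Hypothetical edges and entity types.
edges = {
    ("disease X", "chemical A"): 12,
    ("disease X", "chemical B"): 9,
    ("gene G", "disease X"): 15,
    ("disease Y", "chemical A"): 7,
}
entity_types = {
    "disease X": "disease",
    "disease Y": "disease",
    "chemical A": "chemical",
    "chemical B": "chemical",
    "gene G": "gene",
}

ranked = top_neighbors(edges, entity_types, "disease X", "chemical")
print(ranked)
```

The same function would work with PMI values in place of raw counts, which changes the ranking in favor of more specific associations.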

Beyond pairs: multi-entity patterns and lists

More advanced techniques look for patterns where multiple entities appear together in a consistent way. For instance, a list of cities and their mayors in text may suggest a shared relation like isMayorOf. If this structure appears across documents, the system can propose a group-based relation without deep grammar checks.

This helps when working with unstructured or noisy data, where rule-based parsing is not practical. It allows relation discovery at scale, using only recurring entity patterns and context alignment.

Limits of co-occurrence and context checks

Co-occurrence alone is not enough to confirm a real relationship. For example, if President and Monday often appear together in news stories, it may be because press events are held on Mondays, not because the two words are truly related.

Modern relation extraction systems do not rely only on co-occurrence. Instead, they analyze the context between entities. This includes checking the words and grammar that link them, such as whether one was founded by, treats, or works at the other. Systems use these cues to decide if there is a clear semantic link.

Some industrial tools use machine learning models that combine co-occurrence data, linguistic features, and association scores to predict relationships more accurately.

How entity co-occurrence improves search and information retrieval

Entity co-occurrence helps search engines understand how terms are related. It improves query expansion, ranks results more accurately, and suggests better related searches by studying which words often appear together in web content.

Improving query understanding and expansion

In information retrieval, entity co-occurrence helps search systems understand how terms are connected. When search engines scan web pages and past queries, they check which terms often appear together. For example, if many users search for camera lens, and those two words co-occur in documents often, the system learns they are contextually linked.

This helps in query expansion. If someone searches for electric cars, the engine might also look for results that include EV or electric vehicles, based on known co-occurrence patterns. This improves the chances of retrieving complete and relevant information.
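A toy version of this expansion step might look like the following. It assumes association scores between a query and candidate terms have already been computed from co-occurrence data; the function name, the score table, and its values are illustrative assumptions, not how any particular search engine works.

```python
def expand_query(query, related_terms, max_extra=2):
    """Return the query followed by its most strongly associated terms."""
    candidates = related_terms.get(query, {})
    # Strongest association first; ties are broken alphabetically.
    ranked = sorted(candidates.items(), key=lambda item: (-item[1], item[0]))
    return [query] + [term for term, _ in ranked[:max_extra]]

# Hypothetical association scores derived from co-occurrence statistics.
related_terms = {
    "electric cars": {
        "EV": 0.9,
        "electric vehicles": 0.8,
        "charging station": 0.4,
    }
}

expanded = expand_query("electric cars", related_terms)
print(expanded)
```

Capping the number of added terms keeps the expanded query from drifting away from what the user asked for.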

Ranking based on term proximity

When processing multi-word queries, search engines give more value to documents where the query terms actually co-occur. If both entity A and entity B appear in the same webpage, and that pairing is rare or topic-specific, then the page may be ranked higher. Co-occurrence works here as a clue that the document focuses on the combined meaning, not just the separate parts.
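As a deliberately simplified illustration of proximity-based ranking, the sketch below orders documents by the smallest token distance between two query terms. Production search engines combine many more signals; the function names and the sample documents are assumptions made for the example.

```python
def min_distance(tokens, term_a, term_b):
    """Smallest gap in tokens between any occurrence of the two terms."""
    positions_a = [i for i, t in enumerate(tokens) if t == term_a]
    positions_b = [i for i, t in enumerate(tokens) if t == term_b]
    if not positions_a or not positions_b:
        return None  # the terms do not co-occur in this document
    return min(abs(i - j) for i in positions_a for j in positions_b)

def rank_by_proximity(docs, term_a, term_b):
    """Return ids of documents containing both terms, closest pairs first."""
    scored = []
    for doc_id, text in docs.items():
        distance = min_distance(text.lower().split(), term_a, term_b)
        if distance is not None:
            scored.append((distance, doc_id))
    return [doc_id for _, doc_id in sorted(scored)]

# Hypothetical documents.
docs = {
    "d1": "the camera ships with a kit lens",
    "d2": "this camera lens is sharp",
    "d3": "a lens cloth for cleaning",
}

ranking = rank_by_proximity(docs, "camera", "lens")
print(ranking)
```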

Supporting content suggestions and search logs

Systems also use co-occurrence data from query logs to suggest related searches or autocomplete options. These suggestions reflect common patterns seen in earlier searches. For example, someone typing digital camera might get suggestions like sensor size or lens aperture, based on how often those terms show up together in web content and past queries.

Role in SEO and content design

In search engine optimization (SEO), co-occurrence and co-citation are often discussed as ways to show topic relevance. If a page about digital cameras also includes related terms like shutter speed or ISO range, it gives search systems more context about the topic.

However, search engines do not simply rank pages based on word proximity. Modern algorithms consider many other signals beyond keyword co-occurrence. Still, co-occurrence can help writers identify which key terms appear in trusted or authoritative pages. This can guide better content structure and improve the natural flow of topic coverage.

What are the challenges and limits of using entity co-occurrence

Entity co-occurrence can create false links if terms appear together by chance. It also struggles with word meaning, context size, and vague relationships, so extra tools are often needed to filter and explain the patterns.

Distinguishing signal from noise

One key challenge in entity co-occurrence analysis is noise. Not all co-occurring entities are meaningfully related. In large corpora, some spurious pairs appear together simply by chance or due to broad topic overlap. This creates false signals. To avoid this, researchers apply significance thresholds or require repeated patterns across multiple sources.

Measures like PMI, LLR, and other statistical tests help filter out weak links. These tools compare actual co-occurrence against what would be expected randomly, highlighting non-random associations.
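The idea of requiring repeated patterns across multiple sources can be sketched as a simple filter. Here a pair is kept only if it appears in at least a minimum number of distinct documents; the function names, the threshold, and the toy documents are assumptions for illustration.

```python
from collections import defaultdict
from itertools import combinations

def pairs_by_source(docs):
    """Map each entity pair to the set of source ids it appears in."""
    pair_sources = defaultdict(set)
    for source_id, entities in docs.items():
        for pair in combinations(sorted(set(entities)), 2):
            pair_sources[pair].add(source_id)
    return pair_sources

def keep_repeated(pair_sources, min_sources=2):
    """Keep only pairs seen in at least min_sources distinct sources."""
    return {
        pair
        for pair, sources in pair_sources.items()
        if len(sources) >= min_sources
    }

# Hypothetical entity lists, one per document.
docs = {
    "doc1": ["aspirin", "headache", "Monday"],
    "doc2": ["aspirin", "headache"],
    "doc3": ["Monday", "election"],
}

kept = keep_repeated(pairs_by_source(docs))
print(kept)
```

A source-count filter like this is cheap and can be combined with a score threshold on PMI or LLR for stricter filtering.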

Context window size and scope

The definition of context matters a lot. If the window is too small (such as just a few words), it might miss longer-range links. If it is too broad (like an entire document), it may connect terms that are not truly related. Common options include sentence-level, paragraph-level, or document-level co-occurrence, depending on the goal.
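The effect of the context unit can be seen in a short sketch that extracts pairs at sentence, paragraph, or document level from the same text. Entity matching here is naive substring search, and the sentence splitter is a simple regular expression; both are simplifying assumptions, as are the function name and the sample text.

```python
import re
from itertools import combinations

def pairs_at_level(document, entities, level):
    """Return the entity pairs that share a context at the given level."""
    if level == "document":
        contexts = [document]
    elif level == "paragraph":
        contexts = re.split(r"\n\s*\n", document)
    elif level == "sentence":
        contexts = re.split(r"(?<=[.!?])\s+", document)
    else:
        raise ValueError(f"unknown level: {level}")
    pairs = set()
    for context in contexts:
        # Naive matching: an entity is present if its name occurs as a substring.
        present = sorted(e for e in entities if e in context)
        pairs.update(combinations(present, 2))
    return pairs

# Hypothetical text and entity list.
document = "Marie Curie was born in Warsaw. She later worked in Paris."
entities = ["Marie Curie", "Warsaw", "Paris"]

sentence_pairs = pairs_at_level(document, entities, "sentence")
document_pairs = pairs_at_level(document, entities, "document")
print(sentence_pairs)
print(document_pairs)
```

The sentence window misses the link between Marie Curie and Paris because the second sentence only says "She", while the document window also pairs Warsaw with Paris, which share nothing but the same biography.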

Analysts also explore indirect relationships, where two entities do not co-occur directly but both link to a third one. These second-order or higher-order co-occurrences become weaker with distance but can still reveal structure in the data.

Ambiguity and entity disambiguation

Polysemous entities pose another problem. For instance, Mercury can mean a planet, a chemical element, or a mythological figure. Without clear labeling, co-occurrence data may mix unrelated meanings.

Using entity linking techniques to match each mention to a specific concept or node (such as Mercury (planet) vs. Mercury (element)) improves accuracy. This results in cleaner co-occurrence networks, where each node is more semantically consistent.
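To show why linking mentions before counting matters, here is a toy disambiguation step for the Mercury example. It picks a sense by overlap with a handful of cue words; real entity linkers rely on knowledge bases and trained models, so the cue lists, the function name, and the fallback label are purely illustrative assumptions.

```python
# Hypothetical cue words for each sense of "Mercury".
SENSE_CUES = {
    "Mercury (planet)": {"orbit", "planet", "sun"},
    "Mercury (element)": {"toxic", "thermometer", "metal"},
}

def link_mercury(sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    words = {w.strip(".,").lower() for w in sentence.split()}
    overlap = {sense: len(cues & words) for sense, cues in SENSE_CUES.items()}
    best = max(overlap, key=overlap.get)
    # Without any cue word, leave the mention unresolved rather than guess.
    return best if overlap[best] > 0 else "Mercury (unresolved)"

sentences = [
    "Mercury has the shortest orbit around the sun.",
    "Mercury is a toxic metal.",
    "Mercury was mentioned twice.",
]

labels = [link_mercury(s) for s in sentences]
print(labels)
```

Once each mention carries a sense label, co-occurrence counts are kept per sense, so words like orbit and thermometer no longer end up attached to the same node.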

Limits of relational inference

Even when entities co-occur often, that does not always indicate a true semantic relationship. For example, two scientists may co-occur in articles because they are compared, cited together, or work on shared topics, not because they collaborate.

To understand the relationship type, systems must go beyond frequency. This includes analyzing connecting words, using semantic role labeling, or consulting a knowledge base. Modern NLP pipelines treat co-occurrence as one feature among many, not as final evidence.

Practical tools and ongoing relevance

Despite its limits, co-occurrence remains central to text analysis. It is simple, scalable, and powerful in early discovery. It helps convert unstructured text into structured patterns, such as graphs, clusters, and topic maps.

Popular tools include:

- NLTK and spaCy (for entity extraction)

- NetworkX (for graph building)

- KH Coder (for mining and visualization)

- BlueGraph (used in biomedical research, including COVID-19)
