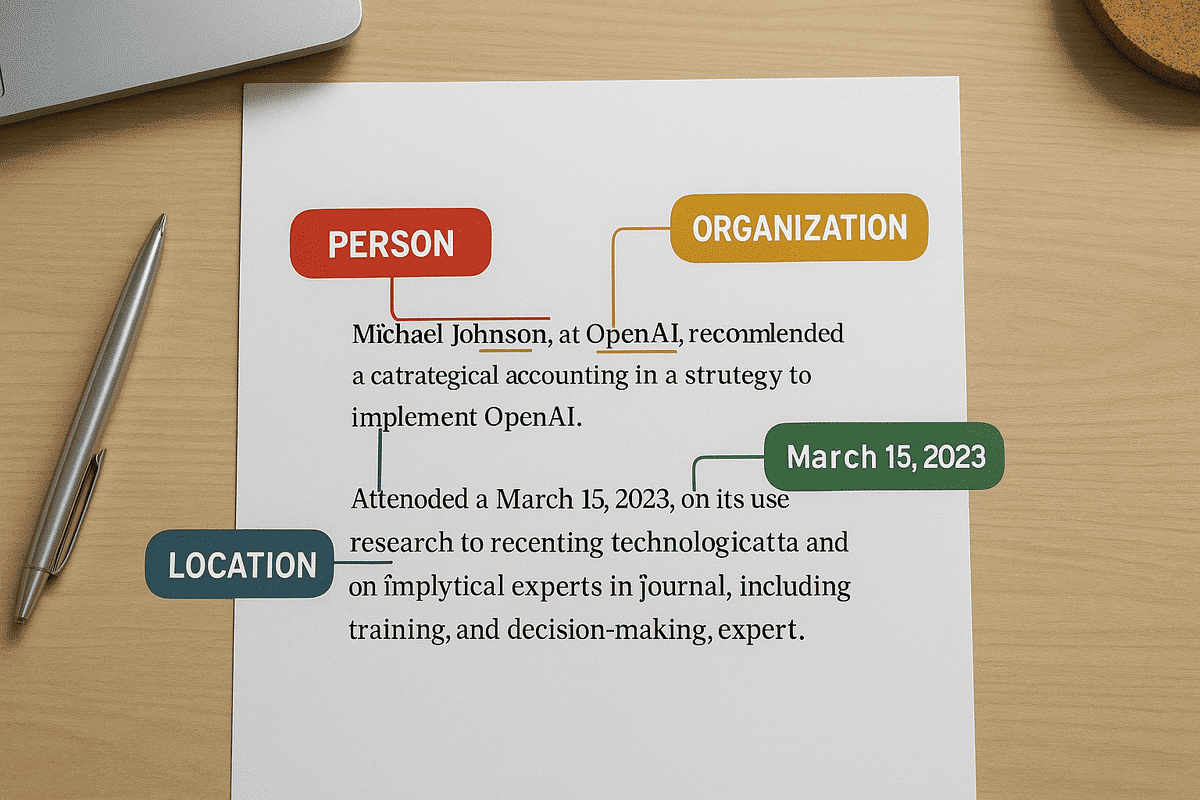

Named Entity Recognition (NER) is a task in natural language processing where a system finds and labels real-world names in text. It spots words like person names, company names, locations, dates, and money values, and tags them with clear labels.

NER helps turn plain, unstructured text into useful, machine-readable data. For example, in the sentence "Alan Turing joined Google in June 2021", an NER system can mark "Alan Turing" as a Person, "Google" as an Organization, and "June 2021" as a Date.

This step is often used in text classification, information retrieval, and automated question answering. It works by matching phrases to pre-set categories based on the meaning they carry. Most systems use linguistic rules, machine learning, or a mix of both.

Named entities usually include:

- People (e.g. Mahatma Gandhi)

- Places (e.g. Mumbai, France)

- Organizations (e.g. WHO, Infosys)

- Dates and time (e.g. 15 August, 2025)

- Monetary amounts (e.g. ₹5 crore)

NER plays a key role in domains like legal tech, healthcare records, and financial analysis, where getting structured data fast is critical.

The term may also appear as entity recognition, entity extraction, or entity chunking, but the core task remains the same: label the key entities inside natural language.

By marking these entities correctly, NER acts as a bridge between text and meaning.

How Named Entity Recognition Works

NER works word by word. Each word is checked to see if it is part of a named entity. If yes, the system also marks what type of entity it is. This makes NER a token classification or sequence labeling task.

There are two main steps:

- Entity detection: This step finds the full phrase that forms the entity. For example, in "the New York Times", the system treats the entire phrase as one unit.

- Entity classification: The system then labels the type. So, "the New York Times" is marked as an Organization.

To handle this, most systems use a tagging format called BIO:

- B (Begin): Start of an entity

- I (Inside): Rest of the entity

- O (Outside): Word is not part of any entity

For example:

Alan/B-PER Turing/I-PER joined/O Google/B-ORG in/O June/B-DATE 2021/I-DATE
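A BIO tag sequence can be turned back into entity phrases with a short decoder. The sketch below is only illustrative: `decode_bio` is a hypothetical helper name, and the tags are written by hand rather than produced by a trained model.

```python
from typing import List, Optional, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO-tagged tokens into (entity text, entity type) pairs."""
    entities: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_type: Optional[str] = None

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity; close any open one first.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [token], tag[2:]
        elif tag.startswith("I-") and current_tokens and tag[2:] == current_type:
            # An I- tag of the same type extends the open entity.
            current_tokens.append(token)
        else:
            # "O", or an I- tag that does not continue the open entity.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None

    if current_tokens:
        entities.append((" ".join(current_tokens), current_type))
    return entities


tokens = ["Alan", "Turing", "joined", "Google", "in", "June", "2021"]
tags = ["B-PER", "I-PER", "O", "B-ORG", "O", "B-DATE", "I-DATE"]
print(decode_bio(tokens, tags))
# [('Alan Turing', 'PER'), ('Google', 'ORG'), ('June 2021', 'DATE')]
```

In this sketch, an I- tag that does not follow a matching B- tag is simply ignored. Real toolkits differ in how they treat such malformed sequences.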

NER systems are trained to spot standard types like:

- Person (PER): Names of people

- Organization (ORG): Company or group names

- Location (LOC): Cities, countries, or regions

- Miscellaneous (MISC): Products, books, events, and more

Some tasks may include very detailed categories such as Law, Work of Art, or Medical Term, based on the domain.

There are three main ways NER systems are built:

- Rule-based: Uses fixed rules and name lists (called gazetteers) to find matches.

- Machine learning-based: Learns from examples in annotated text, and adapts to new data.

- Deep learning-based: Uses neural networks to learn patterns from character-level to full sentence level.

Today, most high-quality NER systems use deep learning because it handles new or rare names better than fixed rules. In the end, NER gives us labeled data from raw text, helping systems answer questions, search better, or understand documents faster.

History of Named Entity Recognition

Named Entity Recognition began in the early 1990s when researchers started looking for ways to automatically find proper names in text. A key milestone came in 1995 during the Sixth Message Understanding Conference (MUC-6), where NER was defined as a task focused on detecting names of people, organizations, locations, dates, and numerical expressions in news reports.

Early Rule-Based Systems

In the 1990s, most NER tools used hand-written rules and gazetteers (lists of known names). These systems checked features like capitalization patterns or fixed phrases to find entities. While effective in news articles, they were hard to adapt to other types of text without rewriting many rules.

Expansion into New Domains

By the late 1990s, NER moved beyond newspapers. Researchers applied it to biomedical text (like gene or protein names), emails, chat data, and non-English languages. New work also started in multilingual NER, helping systems handle languages that have no capital letters or that use complex word forms.

Machine Learning Era

A big shift came in the 2000s with the use of machine learning. NER models began to learn patterns from annotated corpora instead of relying on rules. Methods included:

- Hidden Markov Models (HMMs)

- Decision Trees

- Maximum Entropy Models

- Conditional Random Fields (CRFs)

CRF models, introduced around 2001, became popular for sequence labeling and improved accuracy on English datasets.

Shared benchmarks and tasks helped compare systems, but a known problem was domain adaptation. Models worked well on newswire data but performed poorly on unseen text types unless retrained.

Rise of Deep Learning

From 2015 onwards, deep learning changed NER again. Systems used Recurrent Neural Networks (RNNs) such as LSTMs, often in a Bi-LSTM-CRF architecture, which could detect character-level signals (like common name endings) and long-range patterns in sentences.

A breakthrough came in 2018 with transformer-based models like BERT, which brought contextual embeddings. These models, pre-trained on large corpora, could be fine-tuned for NER with high accuracy and less feature engineering.

Multilingual and Fine-Grained NER

New tools like mBERT and XLM-RoBERTa made it possible to do NER in many languages using a single model. These models helped in low-resource languages, transferring knowledge from English and other well-annotated languages.

Work also grew in nested NER, where entities appear inside other entities (like a city name in a company name), and in fine-grained NER, which adds detailed types like law, product, or work of art.

Present Directions

By the mid-2020s, NER research focuses on:

- Low-resource NER: handling text with very few training examples

- Zero-shot NER: recognizing entities in new domains or languages with no labelled data

- Entity linking: connecting names to entries in a knowledge base

NER has evolved from simple rule-matching into a field driven by neural networks, transfer learning, and multilingual models, becoming a key part of modern NLP pipelines.

Concept and Methodology of Named Entity Recognition

The task of Named Entity Recognition is built around two main ideas: what counts as a named entity, and how the system finds and labels it. A named entity is usually a real-world name, like a person, place, or company. But in practice, NER also includes things like dates, monetary values, or product names, depending on what the system is built to handle.

Defining Named Entities

In general, a named entity refers to something that has a unique name. For example:

- Sachin Tendulkar as a Person

- Mumbai as a Location

- Reserve Bank of India as an Organization

Some systems also include:

- Dates and time expressions

- Currency amounts

- Book titles or product names

What counts as an entity depends on the task. A medical system may label gene names or chemical compounds, while a finance system might focus on company names and currency figures.

Entity Detection and Classification

NER usually has two steps:

- Detection: Finding where the entity starts and ends in the sentence.

- Classification: Labeling the entity with a type (like Person or Date).

Most systems do both steps at once using token-level tagging. They mark each word with a B (Begin), I (Inside), or O (Outside) tag, and the B and I tags also carry the entity type (for example, B-PER). A single tag sequence therefore tells the model both where an entity is and what kind it is.

NER systems often assume:

- Entities are made of contiguous words

- Entities do not overlap

However, some advanced setups can handle nested entities or discontinuous spans, which need more complex models.
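The two assumptions above are what make flat token tagging possible. As a rough illustration, the sketch below converts entity spans into BIO tags and refuses spans that overlap or nest. The `Span` layout (start index, exclusive end index, type) and the function name `spans_to_bio` are assumptions made for this example.

```python
from typing import List, Tuple

# (start token index, end token index (exclusive), entity type)
Span = Tuple[int, int, str]


def spans_to_bio(num_tokens: int, spans: List[Span]) -> List[str]:
    """Convert contiguous, non-overlapping spans into one BIO tag per token."""
    tags = ["O"] * num_tokens
    for start, end, label in sorted(spans):
        if not (0 <= start < end <= num_tokens):
            raise ValueError(f"span ({start}, {end}) is outside the sentence")
        if any(tag != "O" for tag in tags[start:end]):
            # One tag per token cannot represent two entities on the same word.
            raise ValueError("overlapping or nested spans cannot be expressed in flat BIO tags")
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


tokens = ["Reserve", "Bank", "of", "India", "is", "in", "Mumbai"]
print(spans_to_bio(len(tokens), [(0, 4, "ORG"), (6, 7, "LOC")]))
# ['B-ORG', 'I-ORG', 'I-ORG', 'I-ORG', 'O', 'O', 'B-LOC']

try:
    # "India" as a Location nested inside the Organization span.
    spans_to_bio(len(tokens), [(0, 4, "ORG"), (3, 4, "LOC")])
except ValueError as error:
    print("rejected:", error)
```

The rejected case is the kind of nested entity that needs a richer representation than one tag per token.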

Rule-Based Methods

Early NER systems used rules and dictionaries. These matched patterns like:

- Words starting with Dr. or Mr. followed by a capital word (a likely Person)

- Known lists of countries or cities (for Locations)

These rule-based systems worked well in limited areas but were hard to update. Adding more rules often caused errors in other parts of the system.
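A minimal sketch of the two rule types described above, assuming a tiny hand-made gazetteer and a single title pattern. The names `LOCATIONS`, `TITLE_PATTERN`, and `rule_based_ner` are invented for illustration and do not come from any particular system.

```python
import re
from typing import List, Tuple

# Tiny hypothetical gazetteer; a real list would hold many thousands of names.
LOCATIONS = {"Mumbai", "France", "Delhi"}

# A title ("Dr." or "Mr.") followed by one or more capitalized words.
TITLE_PATTERN = re.compile(r"\b(?:Dr|Mr)\.\s+([A-Z][a-z]+(?:\s+[A-Z][a-z]+)*)")


def rule_based_ner(text: str) -> List[Tuple[str, str]]:
    """Return (entity text, type) pairs found by the two hand-written rules."""
    entities: List[Tuple[str, str]] = []
    # Rule 1: a title followed by capitalized words is a likely Person.
    for match in TITLE_PATTERN.finditer(text):
        entities.append((match.group(1), "PER"))
    # Rule 2: a capitalized word found in the gazetteer is a Location.
    for word in re.findall(r"\b[A-Z][a-z]+\b", text):
        if word in LOCATIONS:
            entities.append((word, "LOC"))
    return entities


print(rule_based_ner("Dr. Homi Bhabha worked in Mumbai before visiting France."))
# [('Homi Bhabha', 'PER'), ('Mumbai', 'LOC'), ('France', 'LOC')]

# The same content in lowercase defeats both rules, which shows how brittle they are.
print(rule_based_ner("dr. homi bhabha worked in mumbai"))
# []
```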

Machine Learning Models

Later systems used statistical models trained on annotated corpora. These models learned from labeled examples and used features like:

- Capitalization

- Word position

- Part-of-speech tags

- Context words

Popular models included:

- Hidden Markov Models (HMMs)

- Maximum Entropy Models

- Conditional Random Fields (CRFs)

Machine learning helped systems handle new names not seen in training, but they still needed a lot of labeled data and did not work well in new domains without retraining.
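The hand-crafted features listed above are typically computed per token and passed to a statistical model such as a CRF. The function below is a simplified sketch of that step. The feature names are assumptions, and part-of-speech tags are left out because they would require a separate tagger.

```python
from typing import Dict, List


def token_features(tokens: List[str], i: int) -> Dict[str, object]:
    """Build a feature dictionary for the token at position i."""
    token = tokens[i]
    return {
        "lower": token.lower(),
        # Capitalization cues
        "is_capitalized": token[:1].isupper(),
        "is_all_caps": token.isupper(),
        "has_digit": any(ch.isdigit() for ch in token),
        # Word position in the sentence
        "position": i,
        "is_first": i == 0,
        # Context words on either side
        "prev_word": tokens[i - 1].lower() if i > 0 else "<START>",
        "next_word": tokens[i + 1].lower() if i < len(tokens) - 1 else "<END>",
    }


tokens = ["Sachin", "Tendulkar", "was", "born", "in", "Mumbai"]
for name, value in token_features(tokens, 5).items():
    print(f"{name}: {value}")
```

For "Mumbai", the capitalization flag together with the preceding word "in" is the kind of evidence a trained model can learn to associate with a Location.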

Deep Learning Approaches

From 2015 onwards, deep learning became the main method for NER. These systems used:

- Word embeddings: Dense vectors that capture word meaning

- Character-level embeddings: Useful for rare or unseen names

Popular models were:

- Bi-LSTM with CRF: Looked at both sides of a word to understand its role

- Transformer-based models: Such as BERT, which improved accuracy using contextual embeddings

These models are often fine-tuned: a large pre-trained model is trained again on a smaller NER dataset. This improves performance, even with less data.

Deep learning models can detect more complex patterns but may act like a black box. Their decisions are harder to explain.

Hybrid and Semi-Supervised Techniques

Some systems combine rules with machine learning. Others use semi-supervised methods, which try to learn from unlabeled data. Newer systems also test zero-shot and few-shot learning, which help in tasks with very little or no training data.

Multilingual and Cross-Domain NER

NER was first built for English news text, but modern systems work across languages and domains. Each language poses challenges. For example:

- German capitalizes all nouns

- Chinese has no spaces between words

- Inflected languages change word forms

To manage this, new models use:

- Multilingual embeddings

- Cross-lingual transfer learning

- Translated training data

NER systems also need to adjust when moving from formal news to social media, legal text, or health records. These shifts need domain-specific training, or smart use of pre-trained models that can be adapted with less effort.

The core idea remains the same: NER turns words in natural language into structured data, so other tools can search, group, and understand the information easily.

Applications of Named Entity Recognition

Named Entity Recognition (NER) is widely used in both industry and research to extract structured information from unstructured text. By identifying names of people, places, organizations, dates, and other key terms, NER serves as a foundation for many advanced natural language processing tasks. Its ability to convert free text into labeled data makes it useful across multiple fields where human-readable documents need to be processed at scale.

Information Extraction and Knowledge Bases

NER is often the first step in larger information extraction pipelines. When a system reads raw text, identifying key entities allows it to fill or update knowledge bases or build knowledge graphs. For instance, scanning news articles for the names of public figures or companies can help form a structured list of records.

NER is also used in search engines and question-answering systems. If a user types “Where was Nelson Mandela born?”, the system can recognize “Nelson Mandela” as a Person and return the correct result. It can also highlight direct answers, like a location, from the response content.

Healthcare and Biomedical Text (Bio-NER)

NER plays a major role in processing clinical data. Known as Bio-NER, this version of NER is designed to work with medical vocabulary, which often includes chemical names, drug formulations, gene identifiers, and disease terms. Systems that analyze electronic health records, for example, can use NER to extract information about drug prescriptions, symptoms, or diagnosis terms. This structured output is then used for decision support, patient monitoring, or research curation. In pharmacovigilance, NER helps identify mentions of side effects or drug interactions from large volumes of reports or literature, supporting early detection of risks and treatment outcomes.

Finance and Market Intelligence

NER is also widely applied in the finance industry, where analysts need to track a high volume of documents such as news feeds, earnings calls, and regulatory filings. In this context, NER systems are trained to spot entities like company names, executive roles, stock symbols, financial figures, and geographic locations. For instance, a financial report may be processed to detect all mentions of a specific company, including relevant numbers or associated events. This helps in real-time market analysis, risk management, and even algorithmic trading. Additionally, financial NER can support compliance tasks by identifying flagged names or keywords in contracts, audit logs, or transaction records that need further review.

Legal Document Analysis

NER is used to extract structured data from legal texts such as contracts, court rulings, and regulatory documents. These texts often contain multiple references to persons, corporations, dates, legal citations, and jurisdiction details. NER allows software tools to automatically pull out this information, making it easier for lawyers or researchers to search, compare, and summarize legal content. For example, a system reviewing contracts can highlight all party names, key dates, and clause references. Similarly, legal search engines use NER to group case law by involved entities, helping users quickly find all cases tied to a specific organization or statute.

Beyond these core domains, NER has gained importance in social media monitoring, virtual assistants, and cybersecurity. On platforms like Twitter and online forums, NER helps brands detect mentions of products, services, or public figures in real time. Digital assistants such as voice-based chatbots rely on NER to understand user input—for example, identifying a movie name in a booking request or recognizing the city in a weather query. In security, analysts use NER to scan threat reports for indicators like IP addresses, malware names, or targeted organizations, which are then mapped to internal threat databases.

In all these applications, Named Entity Recognition acts as the first layer of structure in a sea of free text. By labeling the important names and values, it becomes easier to search, filter, analyze, and act on the data. Whether used in a hospital, a courtroom, a financial dashboard, or a chatbot interface, NER helps turn raw language into usable knowledge.

Challenges in Named Entity Recognition

Despite its wide use and steady progress, Named Entity Recognition (NER) still faces several limitations. These challenges arise from the nature of language, diversity in text formats, and the limits of machine learning models. Many of these issues are still active topics in natural language processing research.

Ambiguity and Polysemy

Words in natural language often carry more than one meaning. The same word can refer to different things depending on the context. For example, Jordan might refer to a person’s first name, a last name, or the country. Similarly, Apple may mean either the fruit or the technology company. In such cases, an NER system may misclassify the entity or miss it entirely.

Some names change type depending on usage. For instance, The White House may be treated as a Location (the building) but also represents an Organization (the US presidency). Resolving such cases often requires entity linking or background knowledge, which goes beyond standard NER tagging.

Variations in Naming and Spelling

In real-world text, entities often appear in different forms. A person might be mentioned by full name, only the surname, or even a nickname. A company could appear with its full name in one place and as an abbreviation elsewhere—for example, International Business Machines and IBM. NER systems must identify that these refer to the same entity or at least label them consistently.

Challenges also include misspellings, typos, and transliteration differences—especially when names come from languages with non-Latin scripts. Without strong context modeling or external knowledge sources, many systems struggle to detect and group these variations correctly.
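One simple way to connect an abbreviation to its full form is an initial-letter check. The heuristic below is a deliberately naive sketch (the function name `is_acronym_of` is made up here). It handles cases like IBM but not nicknames, misspellings, or transliteration variants, which need context or external knowledge.

```python
def is_acronym_of(short: str, full: str) -> bool:
    """Check whether `short` matches the initials of the capitalized words in `full`."""
    # Lowercase function words such as "of" are skipped.
    initials = "".join(word[0] for word in full.split() if word[0].isupper())
    return short.replace(".", "").upper() == initials.upper()


print(is_acronym_of("IBM", "International Business Machines"))  # True
print(is_acronym_of("RBI", "Reserve Bank of India"))            # True
print(is_acronym_of("IBM", "Infosys"))                          # False
```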

Domain Adaptation Problems

NER models trained on one kind of text, like news articles, often perform poorly on others, such as social media posts or research papers. This is because language style, vocabulary, and entity types change from one domain to another.

To apply NER in new domains, systems need domain-specific data and retraining. For example, during the COVID-19 outbreak, new terms and organizations quickly emerged, and many systems failed to detect names like COVID-19 or WHO Task Force until updated. Preparing fresh labeled data for each setting takes time and resources, which slows down real-world use.

Multilingual and Low-Resource Challenges

While English NER has seen strong success, many languages still lack the data and tools needed for accurate recognition. Each language presents its own problems. Some do not use capital letters to mark names. Others, like Chinese, do not include spaces between words, which makes word segmentation essential before NER can be applied.

Languages with complex word inflection or compound formation further complicate recognition. Even though multilingual transformers like mBERT or XLM-RoBERTa have improved results, performance still drops for low-resource languages with limited training data.

Technical and Practical Limits

Deep learning models for NER often need high computing power. Training them takes time, and running them in real-time systems may not be efficient—especially on devices with limited resources, such as mobile phones or embedded systems.

Another key issue is model interpretability. In sensitive fields like law or healthcare, people may not trust outputs from a system that cannot explain its decisions. If the system tags a name incorrectly in a medical report or a contract, the mistake may have serious consequences. Ensuring that predictions are both accurate and explainable is an ongoing need.

Evolving Language and Emerging Entities

Language constantly changes. New names, slang, and terms appear regularly, especially in media and online communication. A fixed NER system will not recognize new startup names, public figures, or trending hashtags unless it is frequently updated or has the ability to generalize from contextual cues.

Keeping systems current requires constant updates or integration with live sources such as news feeds, social media, or knowledge graphs. Handling informal language, abbreviations, and shorthand—common in online chats or forums—is another open problem, especially when such examples were not part of the original training data.

These challenges show that even the best NER systems are not error-free. Mistakes such as missing an entity, assigning the wrong type, or merging nearby entities into one are still common. In critical applications, these errors may require manual review or error-tolerant systems downstream. To improve reliability, ongoing work includes transfer learning, weak supervision, crowdsourced annotation, and hybrid models that combine rules with machine learning to better handle complex or rare cases.
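The error types mentioned above can be separated by comparing predicted spans with hand-labeled ones. The following is a small sketch, assuming spans are given as (start token, exclusive end token, type) and using category names chosen for this example.

```python
from typing import Dict, List, Tuple

# (start token index, end token index (exclusive), entity type)
Span = Tuple[int, int, str]


def categorize_errors(gold: List[Span], predicted: List[Span]) -> Dict[str, List[Span]]:
    """Sort predictions into correct, wrong-type, missed, and spurious spans."""
    gold_by_bounds = {(s, e): label for s, e, label in gold}
    pred_by_bounds = {(s, e): label for s, e, label in predicted}
    report: Dict[str, List[Span]] = {
        "correct": [], "wrong_type": [], "missed": [], "spurious": [],
    }

    for (s, e), label in gold_by_bounds.items():
        if (s, e) not in pred_by_bounds:
            report["missed"].append((s, e, label))
        elif pred_by_bounds[(s, e)] == label:
            report["correct"].append((s, e, label))
        else:
            # Boundaries match but the predicted type differs.
            report["wrong_type"].append((s, e, pred_by_bounds[(s, e)]))

    for (s, e), label in pred_by_bounds.items():
        if (s, e) not in gold_by_bounds:
            report["spurious"].append((s, e, label))
    return report


# Tokens: Jordan visited Apple in New York
gold = [(0, 1, "PER"), (2, 3, "ORG"), (4, 6, "LOC")]
predicted = [(0, 1, "LOC"), (2, 3, "ORG"), (4, 5, "LOC")]
for category, spans in categorize_errors(gold, predicted).items():
    print(category, spans)
```

With exact-boundary matching, a span that is cut short or merged with a neighbor shows up as one missed gold span plus one spurious prediction, as happens with "New York" here.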

Impact and Significance of Named Entity Recognition

Named Entity Recognition (NER) has become a key tool in helping machines understand language. It works by pulling out names of people, places, and things from large amounts of text, turning them into structured data that other systems can use. Over time, NER has improved how search engines work, how knowledge is organized, and how text is processed across many fields.

Improving Search and Information Access

One of the most visible uses of NER is in search engines. When someone searches for a name, such as a city or a company, the system needs to understand that the word refers to a specific type of thing. NER helps the engine tag the word as a Location, Person, or Organization, and show better results.

For example, if a person searches for Delhi, the search engine can recognize it as a city, and show a map, travel details, or weather—without needing the user to type more. NER also supports question-answering systems, where the model must find and highlight the correct name in a document that answers the question.

Building Knowledge Graphs

NER plays an important role in building knowledge graphs. These graphs store facts by linking names to known entities. For example, Nelson Mandela is not just a string of text—it can be linked to a record with a date of birth, biography, and related people. This linking process, called entity linking, starts with NER tagging the name correctly in a sentence.

Projects that build knowledge graphs from sources such as Wikipedia and other large text collections often use NER to scan documents and pull out the names that become the nodes in these graphs. Once linked, this structured data can be used by virtual assistants, chatbots, and AI search systems to answer questions more accurately.
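The hand-off from NER to entity linking can be pictured as a lookup from a tagged mention to a record identifier. The toy example below assumes an in-memory dictionary as the knowledge base. The identifiers and the `link_entity` helper are invented for illustration, and real linkers also use context to choose between candidates.

```python
from typing import Dict, Optional

# Toy knowledge base; the identifiers are made up for this sketch.
KNOWLEDGE_BASE: Dict[str, Dict[str, str]] = {
    "nelson mandela": {"id": "kb:0001", "type": "PER"},
    "delhi": {"id": "kb:0002", "type": "LOC"},
    "reserve bank of india": {"id": "kb:0003", "type": "ORG"},
}


def link_entity(mention: str, label: str) -> Optional[str]:
    """Return the record id for a mention if a record with the same type exists."""
    key = " ".join(mention.lower().split())  # normalize case and spacing
    record = KNOWLEDGE_BASE.get(key)
    if record is not None and record["type"] == label:
        return record["id"]
    return None


# (mention, type) pairs as an NER step might produce them
ner_output = [("Nelson Mandela", "PER"), ("Delhi", "LOC"), ("Jordan", "PER")]
for mention, label in ner_output:
    print(mention, "->", link_entity(mention, label))
# Nelson Mandela -> kb:0001
# Delhi -> kb:0002
# Jordan -> None
```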

Supporting Automation in Workplaces

In industries like media, law, and healthcare, NER helps reduce the time spent reading or sorting documents. For example, journalists use it to tag articles with the names of people, places, or organizations, which makes it easier to group and search stories. This saves hours of manual tagging.

In the legal field, software can use NER to scan contracts and highlight key names, dates, or legal terms. In hospitals, NER systems can go through medical records to flag drug names, conditions, or symptoms, helping doctors review large files faster.

Advancing Research in NLP

NER has also pushed research in natural language processing. Since the 1990s, it has been part of shared tasks and global competitions where teams test their models on public datasets. These events help improve tools for new languages and fields.

The move from rule-based systems to deep learning changed how NER works. Models like Bi-LSTM-CRF and transformer-based tools like BERT now give better results with less manual work. These tools learn from context and can work across many kinds of text.

Role of Large Language Models

Recently, large language models (LLMs) such as GPT have introduced a new way to perform NER. Instead of training a model only for NER, users can now prompt the LLM to pick out names directly. This works well in settings where labeled data is limited, and it opens new ideas for how NER might work in the future—more flexible, less training-heavy, and able to reason over wider context.
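Prompt-based NER usually involves two pieces of glue code: building the instruction and checking what comes back. The sketch below shows both without calling any model. The prompt wording, the JSON answer format, and the function names are assumptions for illustration, and `mock_response` is a hand-written stand-in for a model reply.

```python
import json
from typing import List, Tuple

ALLOWED_TYPES = {"PER", "ORG", "LOC", "DATE"}


def build_ner_prompt(text: str) -> str:
    """Compose an instruction asking for entities as a JSON list."""
    types = ", ".join(sorted(ALLOWED_TYPES))
    return (
        "Extract the named entities from the text below.\n"
        f"Allowed types: {types}.\n"
        'Answer with a JSON list of objects with keys "text" and "type".\n\n'
        f"Text: {text}"
    )


def parse_ner_response(response: str, source_text: str) -> List[Tuple[str, str]]:
    """Keep only well-formed entities that actually occur in the source text."""
    try:
        items = json.loads(response)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []

    entities: List[Tuple[str, str]] = []
    for item in items:
        if not isinstance(item, dict):
            continue
        text, label = item.get("text"), item.get("type")
        if (
            isinstance(text, str)
            and isinstance(label, str)
            and label in ALLOWED_TYPES
            and text in source_text  # drop names the model made up
        ):
            entities.append((text, label))
    return entities


sentence = "Alan Turing joined Google in June 2021"
print(build_ner_prompt(sentence))

# Hand-written stand-in for a model reply, including one entity not in the text.
mock_response = (
    '[{"text": "Alan Turing", "type": "PER"},'
    ' {"text": "Google", "type": "ORG"},'
    ' {"text": "Microsoft", "type": "ORG"}]'
)
print(parse_ner_response(mock_response, sentence))
# [('Alan Turing', 'PER'), ('Google', 'ORG')]
```

Validating the reply against the source text is one way to catch entities that a model invents rather than extracts.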
