Crawl budget is the limit set by a search engine for how many pages it will crawl on a website during a certain time. It depends on how much the crawler can crawl and how much it wants to crawl. This limit helps protect both the site’s server and the search engine’s resources.

Search engines like Google do not have unlimited time to check every page on the web. The web is too big for that. So each site gets a share of the attention—a set amount of crawling based on how important and healthy the site is.

If the crawl budget is used up on duplicate, broken, or low-value pages, important content may stay hidden or take longer to show up in search. For websites with thousands of pages, this can affect indexing speed and visibility in results.

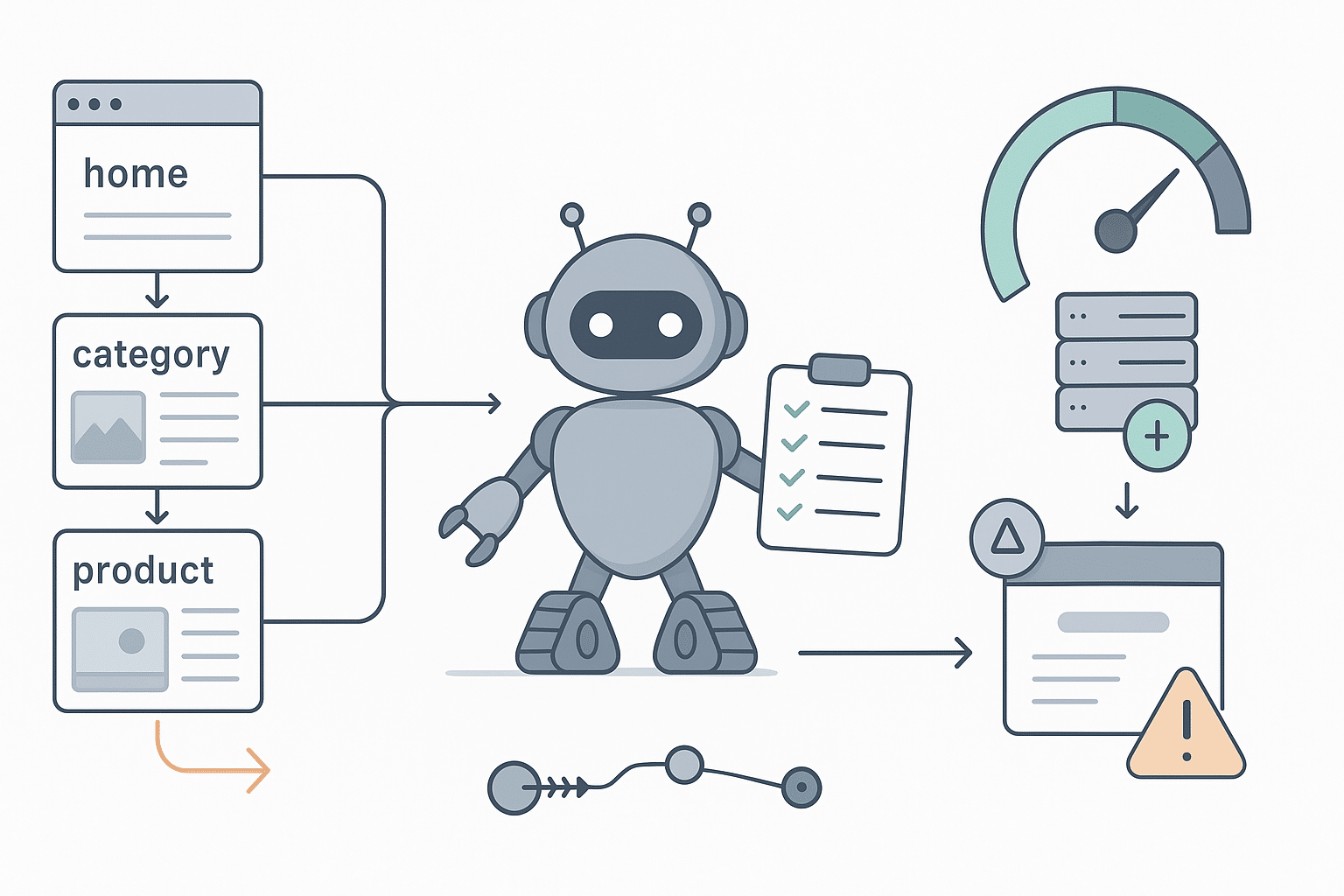

Efficient crawl budget use means making sure that bots focus only on the pages that matter—pages that are fresh, high quality, and meant to be found. SEO experts monitor and adjust site structure to guide crawlers toward these pages.

How crawl budget works and what affects it

A crawl budget depends on two main things: how much a search engine can crawl, and how much it wants to crawl. Google explains this as the set of URLs that Googlebot “can and wants to crawl” on a site. These two parts are called crawl rate limit and crawl demand.

Crawl rate limit

The crawl rate limit (also called crawl capacity) is the top speed at which a crawler will fetch pages from a website. This limit helps avoid overloading the site’s server. If a server responds fast and without errors, the crawler may go faster. If it slows down or shows errors, the crawler will reduce its speed.

The search engine adjusts this rate according to server health. Some webmaster tools, such as Bing Webmaster Tools, let site owners suggest crawl settings, but owners cannot force a search engine to crawl faster than its systems allow. Because search engines have limited crawling resources to share across all websites, they must spread their crawling capacity carefully.
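The speed-up and back-off behavior described above can be pictured as a small controller. This is a toy Python sketch. The starting rate, the step sizes, and the one-second "slow response" threshold are all hypothetical. It is not how Googlebot or Bingbot actually compute their rates.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateController:
    """Toy adaptive crawl-rate controller (hypothetical values, not a real crawler's logic)."""

    rate: float = 1.0                  # requests per second (assumed starting point)
    min_rate: float = 0.1
    max_rate: float = 10.0
    slow_threshold_ms: float = 1000.0  # assumed cutoff for a "slow" response

    def record(self, status: int, response_ms: float) -> float:
        """Update the rate after one fetch and return the new rate."""
        if status >= 500 or status == 429 or response_ms > self.slow_threshold_ms:
            # Server errors, rate limiting, or slow answers: back off sharply (halve).
            self.rate = max(self.min_rate, self.rate / 2)
        else:
            # Fast, successful answer: speed up gently.
            self.rate = min(self.max_rate, self.rate + 0.5)
        return self.rate


controller = CrawlRateController()
controller.record(200, 120)   # healthy: 1.0 -> 1.5
controller.record(200, 150)   # healthy: 1.5 -> 2.0
controller.record(503, 80)    # server error: 2.0 -> 1.0
controller.record(200, 2500)  # too slow: 1.0 -> 0.5
```

Growing slowly and cutting quickly keeps an overloaded server from being pushed further, which matches the goal of protecting the site described above.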

Crawl demand

Crawl demand is how much the search engine wants to crawl a site. This depends on factors like how often the content changes, how many people visit the pages, and how important the site seems based on links or rankings.

Popular or recently updated pages are crawled more often. This keeps the search engine’s index fresh. Even if a page has not changed, the bot may still revisit it to avoid letting it go stale in the index.
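One way to picture crawl demand is as a revisit interval. Pages that change often or attract more attention are revisited sooner, and a ceiling makes sure unchanged pages still get rechecked eventually. The Python sketch below assumes a hypothetical "changes per week" estimate and a popularity score between 0 and 1. Real search engines use their own, undisclosed signals.

```python
from datetime import timedelta


def revisit_interval(
    changes_per_week: float,
    popularity: float,
    min_interval: timedelta = timedelta(hours=1),
    max_interval: timedelta = timedelta(days=30),
) -> timedelta:
    """Toy revisit schedule: frequent changes and popularity shorten the interval."""
    # A page that changes about once a day gets a base interval of about one day.
    base_days = 7 / max(changes_per_week, 0.01)
    # Popularity (0.0 to 1.0) makes the page up to twice as likely to be revisited.
    days = base_days / (1 + popularity)
    # Clamp: never hammer a page, and never let an unchanged page go stale forever.
    return max(min_interval, min(timedelta(days=days), max_interval))


revisit_interval(7, 0.0)   # daily changes, little attention: 1 day
revisit_interval(7, 1.0)   # daily changes, very popular: 12 hours
revisit_interval(0, 0.0)   # never changes: still rechecked every 30 days
```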

Some big events, like a full redesign or a domain switch, can increase crawl demand quickly. The crawler will try to recheck the entire site to update its records. But if a site has many low-value or repetitive pages, the demand for those pages may drop over time.

Combined effect

The final crawl budget is shaped by both rate and demand. For example:

- If a site can handle fast crawling but has little fresh or useful content, the crawl volume stays low.

- If a site has many new or important pages but a weak server, the bot is limited by how fast it can crawl.

In simple terms, crawl budget is the balance between crawling capacity and crawling need.
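The two cases above can be expressed as a toy estimate. Capacity is how many requests the server can safely handle in a day, demand is how many URLs the engine wants to fetch, and crawling stops at the lower of the two. The request rates and URL counts are hypothetical inputs. No search engine publishes these numbers or this formula.

```python
from typing import NamedTuple

SECONDS_PER_DAY = 86_400


class CrawlEstimate(NamedTuple):
    pages_per_day: int
    limited_by: str  # "capacity" or "demand"


def estimate_crawl(safe_requests_per_second: float, urls_wanted_per_day: int) -> CrawlEstimate:
    """Toy model: daily crawl volume is the smaller of capacity and demand."""
    capacity = int(safe_requests_per_second * SECONDS_PER_DAY)
    if capacity < urls_wanted_per_day:
        return CrawlEstimate(capacity, "capacity")
    return CrawlEstimate(urls_wanted_per_day, "demand")


# Fast server, little fresh content: demand is the bottleneck.
estimate_crawl(5, 2_000)      # CrawlEstimate(pages_per_day=2000, limited_by='demand')
# Many new pages, weak server: capacity is the bottleneck.
estimate_crawl(0.1, 50_000)   # CrawlEstimate(pages_per_day=8640, limited_by='capacity')
```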

What affects crawl budget on a website

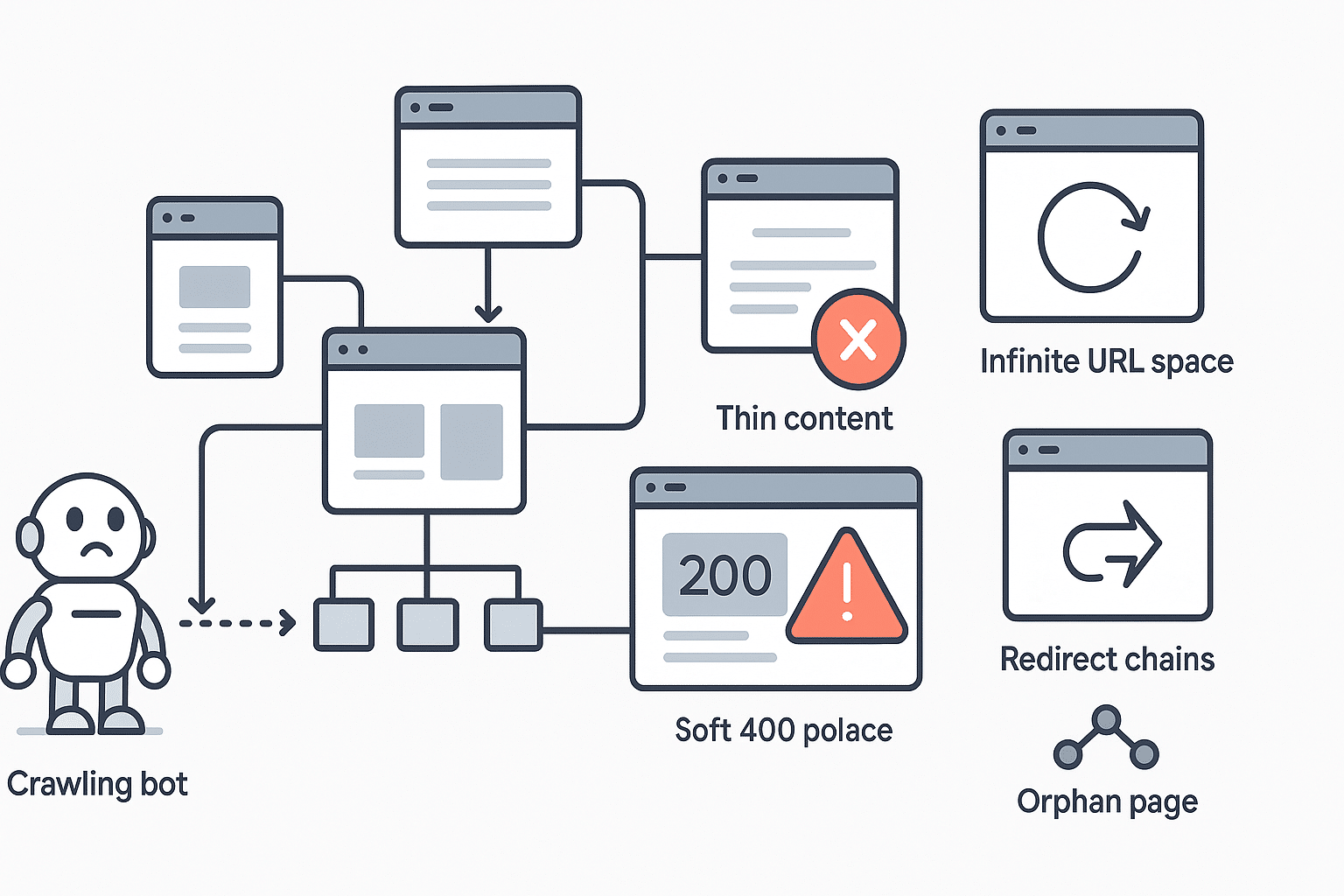

Search engines do not treat every page equally. Some URLs offer little or no value, and crawling them can waste the crawl budget. When a site has many low-value URLs, important pages may get ignored or crawled late.

Google lists several types of pages that reduce crawling efficiency:

- Duplicate and faceted pages: Many e-commerce or large dynamic websites generate duplicate URLs using filters, session IDs, or faceted navigation. For example, links like ?color=red and ?color=blue might load nearly identical content. Crawling each version wastes budget without adding new value.

- Soft error pages: A soft 404 is a page that loads normally but shows an error message or no useful content. Since it returns a success code, crawlers keep revisiting it. These pages should not be indexed, but they still consume crawl resources.

- Infinite URL spaces: Sites with infinite scroll, calendar links, or auto-generated page chains may create thousands of URLs with no end. Crawlers can get stuck, using up budget on pages that add no value. Common examples include ?page=2, ?page=3, and so on, without a clear stop.

- Spam or low-quality content: If a website has many spammy or low-quality pages, the search engine reduces crawl frequency. These pages are unlikely to rank or get indexed, so the bot avoids wasting time on them.

- Hacked or junk pages: Websites with hacked content, such as gibberish pages or injected malware, also face crawl budget issues. The presence of such pages lowers the site's perceived quality, and the crawler may shift attention away from the site.

Crawl waste and site health

If a large part of the site contains error pages, duplicate URLs, or junk content, the crawler spends less time on valuable content. This slows down the indexing of new or updated pages.

To reduce crawl waste:

- Remove unnecessary URLs

- Fix soft errors and broken links

- Limit filters and duplicate parameters

- Block infinite loops in pagination

- Keep spam off the site

Server performance and crawl usage

Server health also impacts how much of the crawl budget is used. Sites with many 5xx errors, frequent timeouts, or slow load speeds get crawled less. Search engines back off if a server looks unstable.

Keeping page speed high and error rates low helps bots fetch more pages. A smooth, fast-loading site can handle a higher crawl load without problems.

Redirect chains can also reduce crawl efficiency. Long redirect paths (e.g., A → B → C) cost more crawler time. Search engines prefer direct, short paths.
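To shorten chains like A → B → C, a site can resolve each redirect to its final destination and then point links and redirect rules straight there. This Python sketch works on a hypothetical in-memory map of source path to target path, such as one exported from a server's redirect configuration. The 10-hop cap is an assumed safety limit.

```python
def resolve_redirect(start: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow redirects from `start`; return (final_url, number_of_hops)."""
    url, hops, seen = start, 0, {start}
    while url in redirects:
        if hops >= max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start}")
        url = redirects[url]
        if url in seen:
            raise ValueError(f"redirect loop involving {url}")
        seen.add(url)
        hops += 1
    return url, hops


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirect source straight at its final target (A -> C, not A -> B -> C)."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


chain = {"/a": "/b", "/b": "/c"}
resolve_redirect("/a", chain)   # ("/c", 2): two hops for the crawler
flatten_redirects(chain)        # {"/a": "/c", "/b": "/c"}: one hop each
```

Loops are reported as errors rather than followed, since a loop never reaches a page the crawler can index.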

Crawl budget and search rankings

Crawl budget affects indexing, not ranking. A page must be crawled to be considered for indexing, but crawling more does not improve rank. Google uses many signals to rank content, and crawl frequency is not one of them.

The goal of crawl optimization is simple: make sure important content gets discovered and indexed quickly. That way, it shows up in search results without delay.

How different search engines handle crawl budget

The idea of crawl budget exists across major search engines, but each one handles it differently. Their bots follow similar principles—avoiding overload and crawling important content first—but the settings, tools, and behavior vary.

Google

Google uses Googlebot, which adjusts crawl behavior based on the site's speed and server response. Google does not let site owners set a specific crawl budget, but they can monitor activity in Google Search Console. This tool shows how many pages get crawled each day and lets users submit sitemaps or request specific URL crawls.

Google Search Console once offered a crawl rate setting that let owners limit Googlebot's crawl rate, though never raise it, and Google has since retired that setting. Googlebot ignores the crawl-delay directive in robots.txt. Instead, it adjusts its speed automatically based on server health and response time.

Google states that small websites with only a few thousand pages rarely need to worry about crawl budget. The issue mainly affects very large sites or ones that generate many parameter-based URLs.

Bing

Microsoft’s Bingbot also manages crawling using a crawl budget model. According to Bing, it decides how much it can crawl without slowing down the site. Bing gives site owners more control than Google through Crawl Control in Bing Webmaster Tools.

With Crawl Control, owners can set when and how fast Bingbot crawls their site. For example, they can reduce crawling during peak hours and speed it up overnight. Bing also supports crawl-delay in robots.txt, which tells the bot to wait a certain number of seconds between requests.
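Python's standard library can show how a crawler reads these directives. The robots.txt content and example.com URLs below are hypothetical. Note that `urllib.robotparser` reads `Crawl-delay` as a whole number of seconds, applies the first matching rule, and does not understand `*` wildcards, so it only approximates how Bingbot treats the file. Googlebot ignores `Crawl-delay` entirely.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: slow Bingbot down and keep every bot out of calendar pages.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 5
Disallow: /calendar/

User-agent: *
Disallow: /calendar/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.crawl_delay("bingbot"))    # 5
print(parser.crawl_delay("Googlebot"))  # None (the * group sets no Crawl-delay)
print(parser.can_fetch("bingbot", "https://example.com/calendar/2030-01-01"))  # False
print(parser.can_fetch("bingbot", "https://example.com/products/shoes"))       # True
```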

Factors that affect Bing’s crawl budget include site size, content freshness, and how well the site is optimized. Sites with good internal links and little duplicate content are easier for Bing to crawl fully.

Other search engines

Search engines like Yandex and Yahoo follow similar rules. Yandex lets owners set preferred crawl hours and speed. Yahoo mainly uses Bing’s index, so its crawling patterns follow Bing’s approach.

DuckDuckGo does not operate its own full crawler. It pulls results from other indexes.

Baidu, China’s largest search engine, has its own bot with crawl limits, but public documentation is scarce. Like others, Baidu aims to avoid overloading servers while covering high-value content.

Overall, all bots follow the idea of being “good citizens” of the web. They balance crawl rate and demand, avoid crashing servers, and aim to use their crawling resources wisely.

Why crawl budget matters for SEO

For small websites with a few hundred pages, crawl budget usually does not pose a problem. Search engines like Google can crawl and index new content from these sites quickly—often on the same day. The site’s crawling capacity is rarely maxed out, so crawl management is not a major focus.

Google states that crawl budget becomes relevant mainly for large websites with thousands of URLs or more. These include e-commerce stores, news platforms, or user-generated content sites. In such cases, not all pages get crawled or updated regularly. Some sections may remain undiscovered for weeks or longer.

A 2020 study showed that Googlebot missed about half of the pages on large enterprise sites. This gap highlights a major risk: if a page is not crawled, it cannot appear in the search index, and therefore cannot bring traffic. Crawl budget optimization becomes crucial for protecting visibility in search results.

Crawlability versus crawl budget

Crawlability and crawl budget are closely linked, but they are not the same. Crawlability means that a page is technically reachable by a crawler: it is not blocked by robots.txt or hidden behind a login wall. A noindex tag does not block crawling; the crawler has to fetch the page to see the tag, which then keeps the page out of the index. If a page is not crawlable, it gets no crawl budget at all.

Once a page is crawlable, it starts competing for the site’s crawl budget. Search engines choose which pages to prioritize. Important and updated pages are usually fetched first, while older or low-value pages might be crawled less often or not at all.

For SEO, both steps are vital:

- Fix crawlability issues to make sure key pages can be accessed

- Optimize crawl budget to ensure bots focus on high-value pages

For instance, a high-priority news update might get crawled within minutes. But an old blog post, even if technically crawlable, could be ignored for months.

SEO performance impact

Managing crawl budget well means more of your important content is available in Google’s index. That leads to better organic visibility and faster updates in search results. Sites that waste crawl budget—by allowing bots to fetch unnecessary or duplicate URLs—risk losing ground in search.

Sites with poor technical SEO—such as broken links, slow servers, or deep page structures—may also limit how much the bot can crawl. That makes it harder for search engines to keep up with the site’s best content.

How to optimize crawl budget effectively

Managing the crawl budget means helping search engines crawl more useful content with the same amount of effort. This is not about getting more crawling, but about making the most of what you already get.

Remove duplicate and thin pages

If your site has duplicate URLs or thin pages, crawlers may waste time on content that adds no new value. Use canonical tags or merge similar pages. If some duplicates must stay (like filter links), block them using robots.txt.

Note: robots.txt stops crawling, not indexing. A blocked URL can still appear in search, without its content, if other sites link to it. Because blocked pages are never crawled, only block URLs that do not need to appear in search results.

Fix error pages

Clean up links that lead to 404 and 410 pages. Googlebot may keep rechecking URLs it already knows are broken, which uses crawl budget, although a 404 or 410 status tells it the URL does not need frequent recrawling. Remove or update broken links, and make sure removed pages return the correct status code.
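Finding the links to fix is a join between a site's link graph and the status codes its URLs return. This Python sketch assumes hypothetical inputs: a list of (source page, target URL) pairs and a status code per URL, for example from a site-audit crawl. It groups pages by the broken target they still link to.

```python
from collections import defaultdict


def broken_link_report(links, status_by_url):
    """Map each broken target (404 or 410) to the pages that still link to it."""
    report = defaultdict(list)
    for source, target in links:
        if status_by_url.get(target) in (404, 410):
            report[target].append(source)
    return dict(report)


links = [("/", "/old-sale"), ("/blog", "/old-sale"), ("/blog", "/about")]
status_by_url = {"/old-sale": 410, "/about": 200}
broken_link_report(links, status_by_url)  # {"/old-sale": ["/", "/blog"]}
```

Grouping by target makes it easy to decide, per broken URL, whether to update the links or redirect the old address.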

Avoid soft 404s—these are empty pages that return a 200 OK. Either redirect them to a valid page or return a proper error code.
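A rough heuristic can flag soft 404 candidates in a site audit: pages that return 200 but carry very little text or typical "not found" wording. The phrase list and the 50-word threshold in this Python sketch are assumptions to tune for each site. Search engines use their own classifiers, and a short but legitimate page can be flagged by mistake.

```python
import re

# Hypothetical phrases; tune them to the wording your own templates use.
ERROR_PHRASES = re.compile(r"page not found|no results found|no longer available", re.IGNORECASE)


def looks_like_soft_404(status: int, body_text: str, min_words: int = 50) -> bool:
    """Flag 200 OK pages that are nearly empty or read like an error page."""
    if status != 200:
        return False  # a real 404/410 is a hard error, not a soft one
    too_thin = len(body_text.split()) < min_words
    return too_thin or ERROR_PHRASES.search(body_text) is not None


looks_like_soft_404(200, "Sorry, page not found.")  # True: should return 404 or redirect
looks_like_soft_404(404, "Sorry, page not found.")  # False: already a proper error code
```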

Use sitemaps and structured hints

An XML sitemap helps crawlers find new or changed content. Include <lastmod> dates so bots know which pages are fresh. While sitemaps do not guarantee crawling, they give strong hints to bots.
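A sitemap with `<lastmod>` dates can be generated from whatever record a site keeps of page changes. This Python sketch uses the standard `xml.etree.ElementTree` module and the sitemaps.org namespace; the list of (URL, last-modified date) pairs is a hypothetical input, for example pulled from a CMS database. ElementTree escapes characters such as `&` in URLs automatically.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """pages: iterable of (absolute_url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # W3C date format (YYYY-MM-DD) tells bots which pages changed recently.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", date(2024, 5, 1)),
    ("https://example.com/shoes?size=m&color=red", date(2024, 4, 20)),
]))
```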

You can also use tools like Google’s URL Inspection Tool or Indexing API (for eligible content) to request faster crawling of key URLs.

Control URL parameters

Tracking codes or filter links often create too many parameter-based URLs. If not managed, crawlers may try every version and waste time.

Use canonical links to tell the bot which version is preferred. You can also block specific URL patterns using robots.txt, especially if they lead to infinite variations.
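Deciding which version is preferred often starts with normalizing URLs: dropping parameters that never change the content and putting the rest in a fixed order. This Python sketch uses `urllib.parse` from the standard library. The set of ignored parameters is a hypothetical, site-specific assumption; the result is the kind of URL a canonical link on each variant could point to.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}


def canonical_url(url: str) -> str:
    """Collapse tracking/session variants of a URL into one preferred form."""
    parts = urlsplit(url)
    # Drop ignored parameters, then sort the rest so that ?size=m&color=red
    # and ?color=red&size=m map to the same URL.
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Lowercase the host and drop the #fragment, which is never sent to the server.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(params), ""))


canonical_url("https://Example.com/shoes?utm_source=mail&size=m&color=red#reviews")
# "https://example.com/shoes?color=red&size=m"
```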

Avoid designs like open-ended calendar URLs, which generate a new page for every day forever.
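Blocking patterns such as calendar pages or filter parameters relies on robots.txt wildcards. Google and the robots.txt standard (RFC 9309) treat `*` as "any characters" and a trailing `$` as "end of URL", use the longest matching rule, and prefer allow on a tie. This Python sketch mimics that matching for testing rules before deploying them. The rule list is hypothetical, and the sketch is a simplified stand-in for a real crawler's parser.

```python
import re


def _pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Longest matching pattern wins; on a tie, allow beats disallow."""
    best_length, allowed = -1, True  # no matching rule means the URL is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow value blocks nothing
        if _pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed


# Hypothetical rules: block endless calendar days and color filters,
# but keep the calendar landing page crawlable.
RULES = [
    ("disallow", "/calendar/"),
    ("allow", "/calendar/$"),
    ("disallow", "/*?color="),
]

is_allowed("/calendar/2031-07-04", RULES)  # False
is_allowed("/calendar/", RULES)            # True (longer allow rule wins)
is_allowed("/shoes?color=red", RULES)      # False
```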

Improve site speed

Fast sites are crawled more often. When servers respond quickly and stay stable, bots can crawl more pages in the same time.

Use compression, fast hosting, and clean code (CSS, JS) to reduce load time. If your server slows down during heavy crawling, you can throttle bots such as Bingbot using Crawl Control or crawl-delay in robots.txt.

Monitor crawl reports

Use Google Search Console’s Crawl Stats to track Googlebot activity. Look for dips in pages crawled or spikes in error rates.

Also check your server logs. They show which parts of your site get crawled the most and which get missed. If crawlers are wasting time on low-value pages, adjust internal links or crawl directives to shift their focus.
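A first pass over server logs can count a crawler's hits per site section, per status code, and on parameterized URLs. This Python sketch assumes access logs in the common "combined" format; the sample lines are made up. User agents can be spoofed, so real crawler traffic should be verified separately (for example with a reverse DNS lookup) before trusting the counts.

```python
import re
from collections import Counter

# Matches the request path, status, and user agent of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def summarize_bot_hits(lines, bot="Googlebot"):
    """Count one crawler's hits per top-level section, per status, and on ?-URLs."""
    sections, statuses = Counter(), Counter()
    parameterized = 0
    for line in lines:
        match = LOG_LINE.search(line.rstrip("\n"))
        if not match or bot not in match.group("agent"):
            continue  # unparseable line or a different user agent
        path = match.group("path")
        section = "/" + path.lstrip("/").split("/", 1)[0].split("?", 1)[0]
        sections[section] += 1
        statuses[match.group("status")] += 1
        if "?" in path:
            parameterized += 1  # often a sign of filter or tracking-parameter waste
    return sections, statuses, parameterized


BOT = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
SAMPLE = [
    '66.249.66.1 - - [10/May/2024:06:25:14 +0000] "GET /shop?color=red HTTP/1.1" 200 5123 "-" ' + BOT,
    '66.249.66.1 - - [10/May/2024:06:25:15 +0000] "GET /blog/post-1 HTTP/1.1" 404 312 "-" ' + BOT,
    '203.0.113.5 - - [10/May/2024:06:26:00 +0000] "GET /shop HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
sections, statuses, parameterized = summarize_bot_hits(SAMPLE)
```

A high share of hits on parameterized URLs or error statuses points to sections where internal links or crawl directives need adjusting.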

SEO impact

By removing crawl waste, guiding bots toward key content, and fixing technical issues, you improve crawl efficiency. This increases the number of important pages that appear in the index—without needing a bigger budget.

References:

- https://developers.google.com/search/docs/crawling-indexing/large-site-managing-crawl-budget

- https://www.botify.com/blog/crawl-budget-optimization

- https://searchengineland.com/guide/crawl-budget

- https://developers.google.com/search/blog/2017/01/what-crawl-budget-means-for-googlebot

- https://searchengineland.com/bing-crawling-indexing-and-rendering-a-step-by-step-on-how-it-works-307592

- https://chemicloud.com/kb/article/crawl-delay-in-your-robots.txt-file/